Risk

Why AI is more accurate than humans at probabilistic risk forecasting

We spend a lot of time thinking about subjectivity, forecasting accuracy, schedule quality, and the way people think about uncertainty and artificial intelligence. nPlan is in the business of forecasting risk on projects, using machine learning to predict the outcomes of construction schedules. People often ask us two questions during the early phases of an engagement:

- How can an algorithm be better than an expert at forecasting risk?

- How can your algorithm work on my unique project?

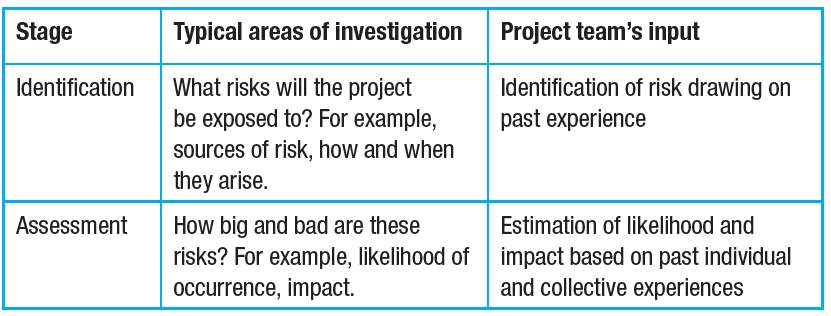

To understand why a machine could outperform project experts, we first need to understand how professionals influence a ‘traditional’ risk management process. Today, most professional bodies and frameworks (including the Institute of Risk Management, ISO and PRINCE2) prescribe a risk management process with the following steps:

- Identification

- Evaluation/assessment

- Analysis

- Treatment

- Review (rinse and repeat)

We can start by looking at the team’s role and influence in the first two steps: identification and assessment. This process is pretty much the same everywhere, whatever the project size, from small (£20m) schemes to mega (£10bn+) ones. This means that project teams may be making the same mistakes over and over again. We believe this is one of the reasons we still see projects delayed, even though delays have been ‘a thing’ for decades.

The problem is that we are asking humans to identify risks, estimate the likelihood of those risks occurring, and estimate their impact if they materialise. This creates not one but three points of subjectivity for each risk item, and many sterling opportunities to mess up the inputs to a risk analysis: “But I’ve got the best tunnelling team ever, and they are estimating these risks, not guessing them!”

A typical risk assessment process, where specific risk events are identified and subjectively quantified.
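The three subjective inputs are easy to see in code. Below is a minimal Python sketch of a traditional risk register, assuming the common convention of scoring each risk as probability multiplied by impact. The `Risk` class, the `expected_delay` helper and all the names and numbers are hypothetical and purely illustrative; the point is how far a single optimistic likelihood estimate moves the headline figure.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Risk:
    # Each field is a separate human judgement: that the event exists at all,
    # how likely it is, and how much delay it causes if it materialises.
    name: str
    probability: float  # subjective likelihood, between 0 and 1
    impact_days: float  # subjective delay in days if the risk occurs

def expected_delay(register):
    """Sum of probability x impact over every identified risk."""
    return sum(r.probability * r.impact_days for r in register)

# Hypothetical register: names and numbers are illustrative only.
register = [
    Risk("Ground conditions worse than surveyed", 0.30, 40.0),
    Risk("Tunnel boring machine breakdown", 0.10, 60.0),
    Risk("Late design approval", 0.50, 20.0),
]

# The same register, but the team is confident their TBM "won't break down".
optimistic = [
    replace(r, probability=0.02) if r.name.startswith("Tunnel") else r
    for r in register
]

print(f"Expected delay (baseline):   {expected_delay(register):.1f} days")    # 28.0 days
print(f"Expected delay (optimistic): {expected_delay(optimistic):.1f} days")  # 23.2 days
```

One confident judgement on one risk cuts the expected delay from 28 to about 23 days. And a risk that nobody identifies never makes it into the register at all, so it contributes nothing: the first, and least visible, of the three points of subjectivity.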

Superforecasting

This is probably as good a time as any to mention that humans are terrible at estimating probabilities and risk. In the book Superforecasting, Philip Tetlock and Dan Gardner describe several years of research into what makes some people remarkably good at forecasting, whilst most do no better than random guessing. If you are serious about risk and forecasting, we recommend putting this book at the top of your reading pile.

The tl;dr (too long; didn’t read) is that it is all about estimating uncertainty, and doing so in a way that is consistent with your own track record of estimation (what forecasters call calibration). It turns out that experts are terrible at this: they are consistently overconfident and ultimately wrong. Yet the way we appraise experts makes us vulnerable to confirmation bias; we end up seeing signal in the noise, praising people who have simply guessed right many times in a row.
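Calibration can be checked directly against a track record. The Python sketch below compares stated probabilities with how often the events actually happened, and scores each forecaster with the Brier score (the mean squared difference between a forecast probability and the 0/1 outcome, where lower is better). The track record is invented for illustration, and `calibration_table` and `brier_score` are hypothetical helper names; this is one standard way to measure calibration, not the only one.

```python
from collections import defaultdict

def brier_score(probs, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes.
    0 is perfect; always forecasting 0.5 scores 0.25."""
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)

def calibration_table(probs, outcomes):
    """Group forecasts by stated probability (to one decimal place) and
    report how often the event actually happened in each group."""
    buckets = defaultdict(list)
    for p, o in zip(probs, outcomes):
        buckets[round(p, 1)].append(o)
    return {p: sum(os) / len(os) for p, os in sorted(buckets.items())}

# Hypothetical track record: an overconfident expert says "90% sure"
# ten times, but only six of those events actually happen.
expert_probs = [0.9] * 10
outcomes = [1, 1, 1, 0, 1, 0, 1, 0, 1, 0]

# A calibrated forecaster says "60%" about the same ten events.
calibrated_probs = [0.6] * 10

print(calibration_table(expert_probs, outcomes))         # {0.9: 0.6}
print(f"{brier_score(expert_probs, outcomes):.2f}")      # 0.33
print(f"{brier_score(calibrated_probs, outcomes):.2f}")  # 0.24
```

The expert is right six times out of ten, yet scores worse than the 0.25 you would get by always saying 50%, because the confident misses are punished heavily.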

Uncertainty, forecasting and risk

When we talk about any future event, we must consider all three: uncertainty, forecasting and risk. They exist together and are forever entangled.

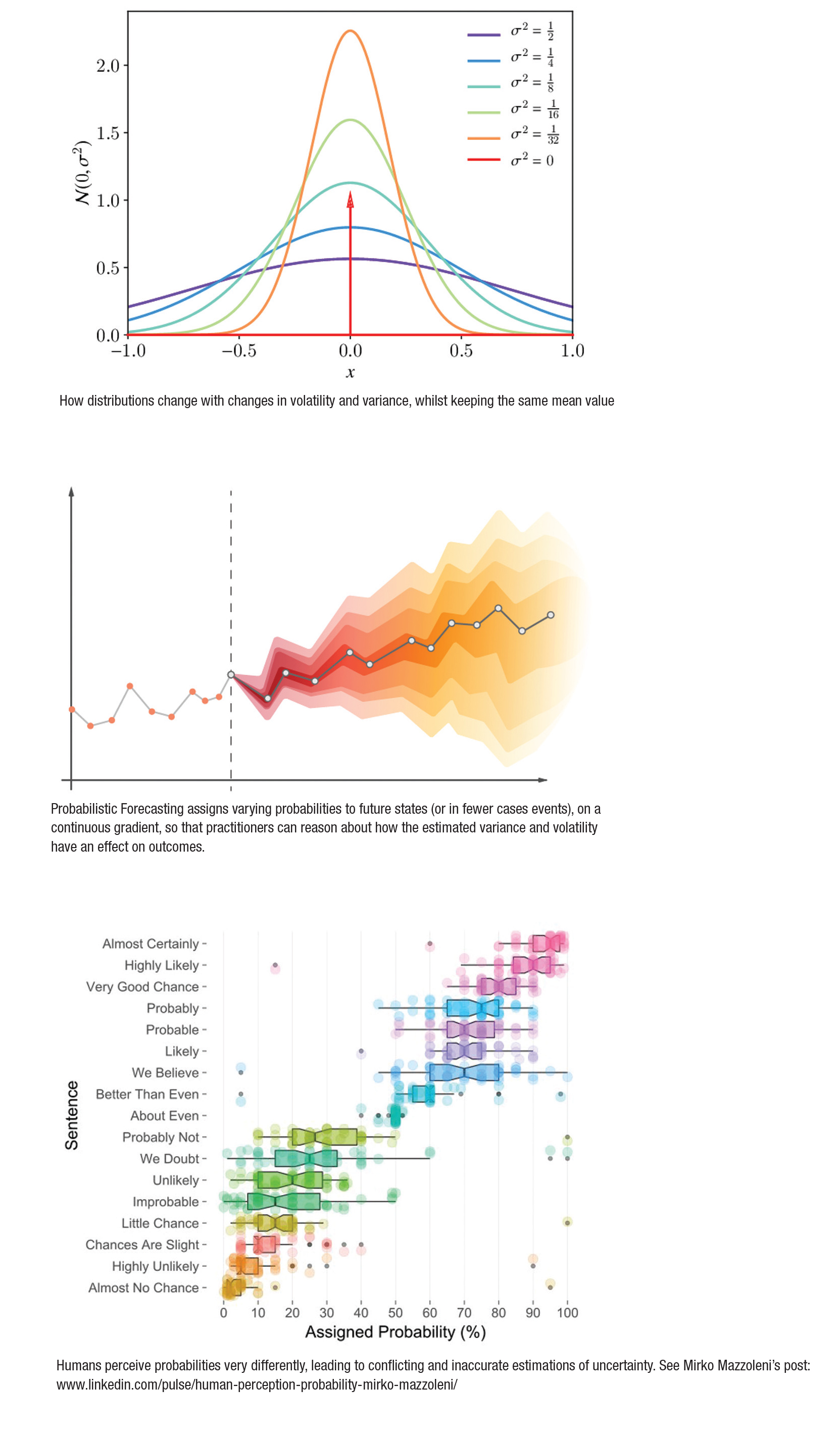

- Risk, defined as broadly as possible, is the potential for deviation from an intended plan or result.

- Forecasting is the act of producing a probabilistic view of future events.

- Uncertainty is the variability of any outcome, and is a direct consequence of risk. It is also included as a parameter when producing forecasts.

The higher the risk, the higher the uncertainty, the wider the forecasts.
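A small Monte Carlo simulation illustrates why higher risk means wider forecasts. This is a minimal Python sketch under deliberately simple assumptions: a hypothetical activity with a fixed 100-day base duration and three independent risk events, each adding a fixed delay when it occurs. The function names and numbers are illustrative, and this is not nPlan's machine-learning approach; it only shows how the P10 to P90 range responds when the same risks become more likely.

```python
import random
import statistics

def simulate_durations(base_days, risks, n=10_000, seed=0):
    """Monte Carlo: each run adds the delay of every risk event that occurs."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    runs = []
    for _ in range(n):
        delay = sum(impact for p, impact in risks if rng.random() < p)
        runs.append(base_days + delay)
    return runs

def p10_p90(samples):
    # statistics.quantiles with n=10 returns the 9 decile cut points.
    deciles = statistics.quantiles(samples, n=10)
    return deciles[0], deciles[-1]

base = 100  # hypothetical base duration in days

# Hypothetical risks as (probability, delay in days): same impacts, different likelihoods.
low_risk = [(0.05, 20), (0.05, 15), (0.05, 10)]
high_risk = [(0.40, 20), (0.40, 15), (0.40, 10)]

for label, risks in [("low risk", low_risk), ("high risk", high_risk)]:
    p10, p90 = p10_p90(simulate_durations(base, risks))
    print(f"{label}: P10={p10:.0f} days, P90={p90:.0f} days, width={p90 - p10:.0f}")
```

In both cases the P10 stays at the 100-day base duration, because more than 10% of runs see no risk event at all; only the upper tail moves out as the risks become more likely, so the forecast range widens.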